Recent

Paper Accepted at ICLR 2025 (Spotlight): When do GFlowNets Learn the Right Distribution?

I am delighted to announce that our paper, When do GFlowNets Learn the Right Distribution?, has been accepted for presentation at ICLR 2025! This work advances our theoretical understanding of Generative Flow Networks (GFlowNets) by examining the impact of balance violations on their ability to approximate target distributions and proposing a novel metric for assessing correctness.

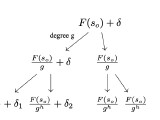

When do GFlowNets Learn the Right Distribution?

Analysis of the limitations and stability of GFlowNets under balance violations, showing how such violations affect their ability to approximate the target distribution. We introduce a novel metric for assessing correctness, improving evaluation beyond existing protocols.

ICLR 2025 (Spotlight, ~top 5% 🎉)

Workshop Papers Accepted at LatinX in AI Research at NeurIPS 2024

I am thrilled to share that two of our papers have been accepted at the LatinX in AI Research Workshop at NeurIPS 2024! These works contribute new methodologies and insights in causal inference and generative modeling.

Paper Accepted at NeurIPS 2024: On Divergence Measures for Training GFlowNets

I’m excited to announce that our paper, “On Divergence Measures for Training GFlowNets,” authored by Tiago da Silva, Eliezer de Souza da Silva, and Diego Mesquita, has been accepted at NeurIPS 2024!

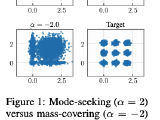

On Divergence Measures for Training GFlowNets

Novel approach to training Generative Flow Networks (GFlowNets) by minimizing divergence measures such as the Rényi-$\alpha$, Tsallis-$\alpha$, and Kullback-Leibler (KL) divergences. Stochastic gradient estimators with variance reduction techniques lead to faster and more stable training.

NeurIPS 2024 (Poster)