When do GFlowNets Learn the Right Distribution?

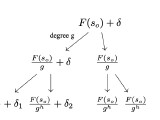

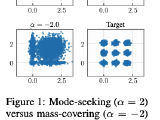

An analysis of the limitations and stability of GFlowNets when their balance conditions are violated, showing how these violations degrade the accuracy of the learned distribution. We also introduce a novel metric for assessing distributional correctness that improves evaluation beyond existing protocols.

ICLR 2025 (Spotlight, ~top 5% 🎉)