Human-in-the-Loop Causal Discovery under Latent Confounding using Ancestral GFlowNets

We introduce a causal discovery method that estimates uncertainty and refines results with expert feedback. Using generative flow networks, we sample belief-based ancestral graphs that captures latent-confounding, and iteratively reduce uncertainty through human input, with a human-in-the-loop approach.

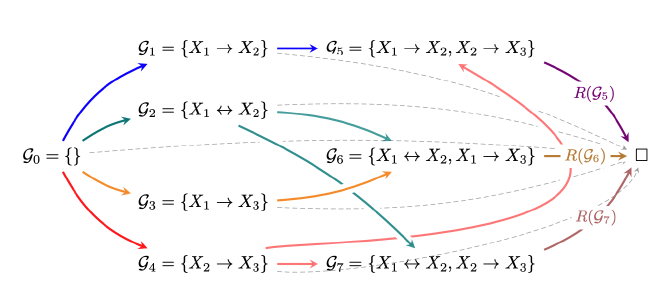

Abstract Structure learning is the crux of causal inference. Notably, causal discovery (CD) algorithms are brittle when data is scarce, possibly inferring imprecise causal relations that contradict expert knowledge – especially when considering latent confounders. To aggravate the issue, most CD methods do not provide uncertainty estimates, making it hard for users to interpret results and improve the inference process. Surprisingly, while CD is a human-centered affair, no works have focused on building methods that both 1) output uncertainty estimates that can be verified by experts and 2) interact with those experts to iteratively refine CD. To solve these issues, we start by proposing to sample (causal) ancestral graphs proportionally to a belief distribution based on a score function, such as the Bayesian information criterion (BIC), using generative flow networks. Then, we leverage the diversity in candidate graphs and introduce an optimal experimental design to iteratively probe the expert about the relations among variables, effectively reducing the uncertainty of our belief over ancestral graphs. Finally, we update our samples to incorporate human feedback via importance sampling. Importantly, our method does not require causal sufficiency (i.e., unobserved confounders may exist). Experiments with synthetic observational data show that our method can accurately sample from distributions over ancestral graphs and that we can greatly improve inference quality with human aid.

Under review

- Tiago da Silva, Eliezer de Souza da Silva, Adèle Ribeiro, António Góis, Dominik Heider, Samuel Kaski, Diego Mesquita. Human-in-the-Loop Causal Discovery under Latent Confounding using Ancestral GFlowNets. Preprint, ArXiv:2309.12032, September 2023.