Prior Specification for Bayesian Matrix Factorization via Prior Predictive Matching

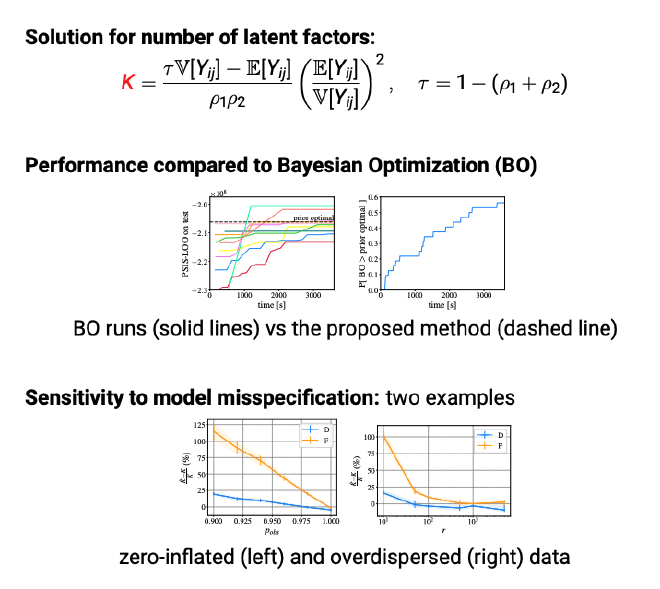

We propose a method for prior specification that optimizes hyperparameters via the prior predictive distribution. The approach matches virtual statistics generated by the prior to given target values. We apply it to Bayesian matrix factorization models, obtaining a closed-form formula for the latent rank (the number of factors) and analytically determining the matching hyperparameters, and we extend it to general models through stochastic optimization.

JMLR 2023

ICML 2024 (Poster, Journal Track)

Abstract

The behavior of many Bayesian models used in machine learning critically depends on the choice of prior distributions, controlled by some hyperparameters typically selected through Bayesian optimization or cross-validation. This requires repeated, costly, posterior inference. We provide an alternative for selecting good priors without carrying out posterior inference, building on the prior predictive distribution that marginalizes the model parameters. We estimate virtual statistics for data generated by the prior predictive distribution and then optimize over the hyperparameters to learn those for which the virtual statistics match the target values provided by the user or estimated from (a subset of) the observed data. We apply the principle for probabilistic matrix factorization, for which good solutions for prior selection have been missing. We show that for Poisson factorization models we can analytically determine the hyperparameters, including the number of factors, that best replicate the target statistics, and we empirically study the sensitivity of the approach for the model mismatch. We also present a model-independent procedure that determines the hyperparameters for general models by stochastic optimization and demonstrate this extension in the context of hierarchical matrix factorization models.
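To make the idea of matching virtual statistics concrete, here is a minimal Python sketch for a simplified Poisson factorization model. It is an illustration under stated assumptions, not the paper's derivation or code: it assumes entries Y_ij ~ Poisson(sum_k theta_ik * beta_jk) with theta_ik ~ Gamma(a, b) and beta_jk ~ Gamma(c, d) (shape, rate), it matches only the mean and variance of a single entry, and it treats the shapes a and c as fixed by the user. The function names are hypothetical. Under these assumptions the law of total variance gives E[Y] = K(a/b)(c/d) and Var[Y] = E[Y] + K(a/b)(c/d)(a + c + 1)/(bd), which can be inverted for K and the product bd.

```python
import numpy as np


def analytic_moments(K, a, b, c, d):
    """Prior predictive mean and variance of one entry Y_ij.

    Assumes theta ~ Gamma(shape=a, rate=b), beta ~ Gamma(shape=c, rate=d),
    and Y | theta, beta ~ Poisson(sum over K factors of theta * beta).
    """
    m = (a / b) * (c / d)  # E[theta_ik * beta_jk]
    mean = K * m
    # Law of total variance: Var[Y] = E[rate] + Var[rate].
    var = mean + K * m * (a + c + 1) / (b * d)
    return mean, var


def simulate_moments(K, a, b, c, d, n_draws=200_000, seed=0):
    """Monte Carlo estimate of the same virtual statistics."""
    rng = np.random.default_rng(seed)
    # NumPy parameterizes the gamma by scale = 1 / rate.
    theta = rng.gamma(shape=a, scale=1.0 / b, size=(n_draws, K))
    beta = rng.gamma(shape=c, scale=1.0 / d, size=(n_draws, K))
    y = rng.poisson((theta * beta).sum(axis=1))
    return y.mean(), y.var()


def match_hyperparameters(target_mean, target_var, a, c):
    """Invert the two moment equations for K and the product b * d.

    Simplifying assumption of this sketch: the shapes a and c are given.
    Returns a real-valued K; round it to obtain a number of factors.
    """
    if target_var <= target_mean:
        raise ValueError("this model requires overdispersion: var > mean")
    bd = (a + c + 1) * target_mean / (target_var - target_mean)
    K = target_mean * bd / (a * c)
    return K, bd


if __name__ == "__main__":
    print(analytic_moments(10, 1.0, 1.0, 1.0, 1.0))
    print(simulate_moments(10, 1.0, 1.0, 1.0, 1.0))
    print(match_hyperparameters(10.0, 40.0, 1.0, 1.0))
```

Only the product bd is identified from these two statistics, so the individual rates remain free in this sketch; identifying more hyperparameters requires matching additional statistics.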

Publication

- Eliezer de Souza da Silva, Tomasz Kuśmierczyk, Marcelo Hartmann, and Arto Klami. Prior specification for Bayesian matrix factorization via prior predictive matching. Journal of Machine Learning Research 24, 1, Article 67, January 2023, 51 pages.

Additional material

- GitHub Code

- Bibtex Citation

- ICML 2024 poster presentation page (special track for JMLR papers)

Interesting follow-up works (not done by me)

- Bockting, F., Radev, S.T. & Bürkner, PC. Simulation-based prior knowledge elicitation for parametric Bayesian models. Scientific Reports 14, 17330. 2024. https://doi.org/10.1038/s41598-024-68090-7

- Project Website: https://florence-bockting.github.io/PriorLearning/

- Manderson, Andrew A., and Goudie, Robert J. B. Translating predictive distributions into informative priors. arXiv preprint arXiv:2303.08528, 2023.