Analyzing GFlowNets: Stability, Expressiveness, and Assessment

We study how balance violations impact the learned distribution, motivating a weighted balance loss that improves training. For graph distributions, there are scenarios where balance is unattainable, and richer embeddings of children’s states are needed to enhance expressiveness. To measure distributional correctness in GFlowNets, we introduce a novel, provably correct assessment metric.

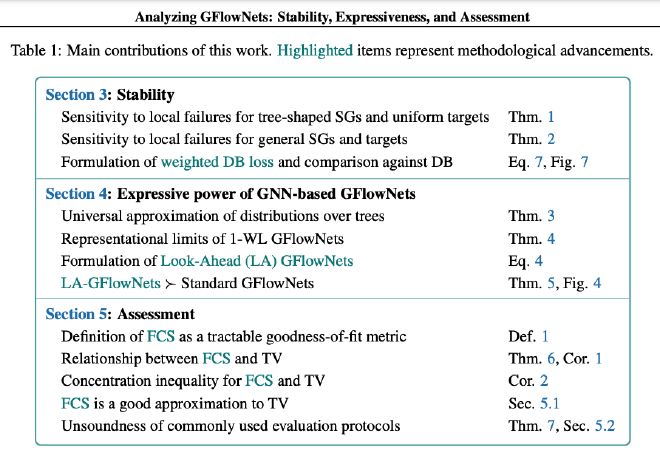

Abstract

Generative Flow Networks (GFlowNets) are powerful samplers for distributions over compositional objects (e.g., graphs). In this work, we analyze GFlowNets from three fundamental perspectives: stability, expressiveness, and assessment.

For stability, we analyze how fluctuations in balance conditions impact the accuracy of GFlowNets. Our theoretical results suggest that i) the effect of balance violations is heterogeneous across the state graph and ii) each node’s influence on GFlowNet’s accuracy is tied to the reward associated with its descendants. We leverage these insights to propose a weighted balance loss that leads to faster training convergence.
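To make the idea of a weighted balance loss concrete, the following is a minimal sketch and not the paper's exact formulation. It assumes the standard detailed balance condition, F(s) P_F(s'|s) = F(s') P_B(s|s'), and averages the squared log-residuals with per-transition weights. The function name `weighted_db_loss` and the `weights` argument are hypothetical; how the weights should be chosen (for instance, from the rewards of a state's descendants) is left open here.

```python
def weighted_db_loss(log_f_s, log_pf, log_f_next, log_pb, weights):
    """Weighted average of squared detailed-balance residuals (illustrative).

    Each argument is a list with one entry per transition s -> s':
      log_f_s    : log F(s), log-flow at the parent state
      log_pf     : log P_F(s' | s), forward policy log-probability
      log_f_next : log F(s'), log-flow at the child state
      log_pb     : log P_B(s | s'), backward policy log-probability
      weights    : non-negative weight per transition (hypothetical scheme)
    """
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")

    loss = 0.0
    for lf, pf, lfn, pb, w in zip(log_f_s, log_pf, log_f_next, log_pb, weights):
        # The residual is zero exactly when detailed balance holds
        # for this transition.
        residual = lf + pf - lfn - pb
        loss += w * residual ** 2
    return loss / total_weight
```

With uniform weights this reduces to the usual mean squared detailed-balance residual; non-uniform weights simply shift emphasis toward the transitions whose violations are assumed to matter most.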

Regarding expressiveness, we consider GFlowNets for graph generation. We prove that, given a suitable state graph, GFlowNets can accurately learn any distribution supported over trees. Strikingly, however, we show simple combinations of state graphs and reward functions that cause GFlowNets to fail, i.e., for which balance is unattainable. We propose leveraging embeddings of children’s states to circumvent this limitation and thus increase the expressiveness of GFlowNets, provably.

Lastly, we propose a theoretically sound and computationally tractable metric for assessing GFlowNets. We experimentally show it is a better proxy for distributional correctness than popular evaluation protocols.

Publication

- Tiago da Silva, Eliezer de Souza da Silva, Rodrigo Barreto Alves, Luiz Max Carvalho, Amauri H Souza, Samuel Kaski, Vikas Garg, Diego Mesquita. Analyzing GFlowNets: Stability, Expressiveness, and Assessment. Preprint (non-archival), accepted at ICML 2024 Workshop on Structured Probabilistic Inference & Generative Modeling (SPIGM@ICML), July 2024. (https://openreview.net/forum?id=B8KXmXFiFj)

Extras