On Divergence Measures for Training GFlowNets


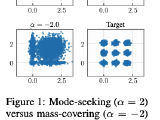

This paper presents a novel approach to training Generative Flow Networks (GFlowNets) by minimizing divergence measures such as the Rényi-$\alpha$, Tsallis-$\alpha$, and Kullback-Leibler (KL) divergences. Stochastic gradient estimators that use variance reduction techniques lead to faster and more stable training.
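For readers unfamiliar with the three divergence families named above, the following is a minimal sketch of their textbook definitions for two discrete distributions `p` and `q`. It is not the paper's training objective or its gradient estimators. The function names are hypothetical, and the sketch assumes $\alpha > 0$, $\alpha \neq 1$, and `q` strictly positive wherever `p` is.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) = sum_x p(x) * log(p(x) / q(x))."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def _power_sum(p, q, alpha):
    # sum_x p(x)^alpha * q(x)^(1 - alpha), the term shared by both alpha-divergences
    return sum(pi ** alpha * qi ** (1 - alpha) for pi, qi in zip(p, q) if pi > 0)


def renyi_divergence(p, q, alpha):
    """Renyi-alpha divergence: log(power_sum) / (alpha - 1)."""
    return math.log(_power_sum(p, q, alpha)) / (alpha - 1)


def tsallis_divergence(p, q, alpha):
    """Tsallis-alpha divergence: (power_sum - 1) / (alpha - 1)."""
    return (_power_sum(p, q, alpha) - 1) / (alpha - 1)


# Illustrative inputs only (not data from the paper).
p = [0.5, 0.5]
q = [0.9, 0.1]
kl = kl_divergence(p, q)
renyi_near_one = renyi_divergence(p, q, 1.0001)
tsallis_near_one = tsallis_divergence(p, q, 1.0001)
```

Both $\alpha$-divergences recover the KL divergence in the limit $\alpha \to 1$, which is why `renyi_near_one` and `tsallis_near_one` land close to `kl` in this example.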

NeurIPS 2024 (Poster)